Woman files for divorce after ChatGPT ‘exposes’ husband’s affair through coffee cup

In a digital landscape increasingly shared with artificial intelligence, one woman's reliance on technology for personal matters has opened up essential discussions about trust, interpretation, and the consequences of our digital interactions.

Image: Feyza Daştan/Pexels

Artificial Intelligence (AI) is seamlessly integrated into our lives, whether it’s managing work tasks, controlling smart homes, or offering advice on parenting, fitness, and even mental health.

Platforms like ChatGPT have become trusted companions for many, solving everything from quick research queries to complex emotional dilemmas.

However, what happens when we place too much trust in AI?

One woman in Greece found herself at the centre of a media storm when she used ChatGPT in an unconventional way, asking it to interpret the coffee grounds at the bottom of her husband’s cup.

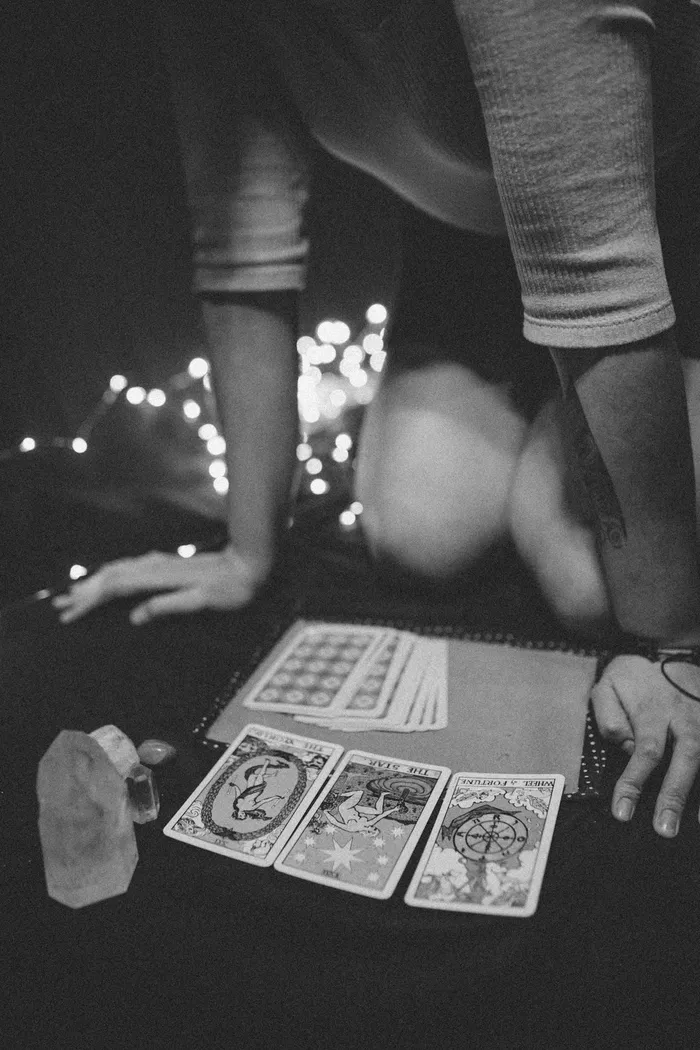

Known as tasseography, this ancient fortune-telling practice involves interpreting patterns in coffee grounds, tea leaves, or wine sediments to predict the future.

What followed was a dramatic chain of events that led to her filing for divorce, allegedly based on ChatGPT's "reading."

When fortune-telling meets AI

According to reports in the Greek City Times, the unnamed woman, a mother of two married for 12 years, uploaded a photograph of her husband’s coffee cup to ChatGPT, asking it to analyse the patterns.

The AI’s response was shocking: it suggested that her husband was considering starting an affair with a woman whose name began with the letter "E".

It went even further, claiming that the affair had already begun and that the "other woman" had plans to destroy their marriage.

For the husband, this was just another episode in his wife’s long-standing fascination with the supernatural.

Speaking on the Greek morning show To Proino, he said she had previously consulted astrologers, only to realise later that their predictions were baseless.

But this time, her belief in ChatGPT’s "divine" analysis led to irreversible damage.

Despite his attempts to laugh off the situation, his wife took the accusations seriously.

Within days, she asked him to move out, informed their children of a pending divorce, and even involved a lawyer.

The husband, who denies any wrongdoing, is now contesting her claims, arguing that they are based on fictional interpretations by an AI and have no legal merit.

This bizarre story strikes at the heart of a growing concern: our increasing reliance on AI and its impact on how we perceive reality.

While tools like ChatGPT are designed to generate plausible responses based on the data they’ve been trained on, they don’t possess true insight, intuition, or the ability to divine the future.

ChatGPT was not built to interpret coffee grounds or predict human behaviour.

It’s a language model that generates responses based on probabilities, influenced by the prompt it receives.

Yet, incidents like this highlight how easily people can misinterpret AI’s capabilities, especially when they lack a clear understanding of its limitations.

On Reddit, reactions to this story were a mix of humour and concern.

Some joked about AI taking over psychic jobs, while others expressed deeper fears about how tools like ChatGPT are blurring the line between reality and fiction.

One commenter aptly noted, “We’re going to see a wave of people whose ability to comprehend reality has been utterly annihilated by LLM tools.”

As AI continues to evolve, users must understand what these tools can and cannot do.

ChatGPT, for example, can provide thoughtful responses, summarise information, and even offer creative input.

But it is not omniscient, nor can it accurately predict human behaviour or events.

Moreover, this story underscores the psychological vulnerability that some people may have when interacting with AI.

For individuals already inclined to believe in fortune-telling or the supernatural, AI might appear as a modern, more credible alternative.

This misplaced trust can lead to significant emotional and relational consequences, as seen in this case.

As we continue to integrate AI into our daily lives, it’s essential to remember that these tools are not infallible.

They are here to assist us, not replace critical thinking, human intuition, or professional expertise.

And perhaps most importantly, they are not fortune-tellers, no matter how convincing their responses may seem.
